Robotics and computer vision are helping to shape the future of off-road equipment towards autonomy

This article was first published in the iVT Magazine, Annual Review 2021

By Marco Pedretti and Andrea Incerti Delmonte, Computer Vision & Robotics Engineers at TTControl

While fully autonomous cars will not be seen widely on our roads any time soon, automated off-highway machines are likely to arrive first. Mobile machinery manufacturers already face rapidly increasing complexity as they implement electronic, sensing, and software architectures. Driven by strong customer demand for greater efficiency, repeatability, and throughput, the answer to this challenge is clear: more automation. Success, however, depends on the right strategy, one that includes access to technology, empowered operators, and vertical integration with suppliers who have the necessary know-how at their disposal.

Enabling Technologies

Sensors, algorithms, and high-performance computing platforms are the key components required to reach the necessary levels of automation. First, sensors perceive the surrounding environment and produce huge amounts of multi-modal data. Then, sophisticated algorithms extract meaningful information from that data and drive the decision-making process. Classical embedded architectures cannot satisfy this exponentially increasing computational demand, so high-performance computing (HPC) architectures are needed to handle such workloads efficiently. Off-highway OEMs can benefit from the huge engineering and economic resources already invested in self-driving car programs. Sensors, algorithms, and computing platforms that were considered mere research tools just a few years ago are now becoming available for real-world adoption, and they have achieved the robustness and reliability required for typical off-highway scenarios.

Automation Empowers Operators

It should be noted that removing or replacing the operator is not the main goal. On the contrary, general trends are pushing toward the deployment of advanced driver assistance systems (ADAS). Some of today's operational tasks will be lifted from the operators' shoulders, allowing them to focus exclusively on value-added work. In another scenario, reducing the overall complexity of operating the machinery will cut the training effort needed to qualify operators to handle machines properly. In yet other scenarios, a further increase in machine size is expected to boost productivity and efficiency. On construction sites, for example, automation provides the situational awareness needed to detect people and obstacles around the machinery, improving both machine and operator safety. Another example comes from agriculture, where producers of high-value crops such as fruits and vegetables can benefit greatly from real-time process optimization. By saving resources such as water and chemicals, this optimization can also significantly reduce environmental impact.

Vertical Integration - the Common Thread

The main ingredients for developing the automated functionalities described above are deep market understanding and technical know-how. This know-how spans a wide range of disciplines, from electronics to machine learning and robotics. These competencies are usually spread across a set of specialized supply chains, leaving a non-negligible integration effort to the OEM. The complexity at stake, however, demands a vertically integrated approach in which a single supplier acts as the single point of contact.

Twenty Years of Expertise

TTControl is optimally positioned to help OEMs follow this approach. A joint venture of TTTech and HYDAC International, the company is a leading supplier of electronic control systems, operator interfaces, and connectivity solutions for mobile machinery. As an early adopter, TTControl has years of experience in integrating new technologies into cutting-edge products and systems, enabling its customers worldwide to provide reliable and innovative solutions to their markets.

With its latest research and development investments in computer vision and artificial intelligence, TTControl is strengthening its focus on new technologies alongside its core products.

Holistic Approach

While machine expertise remains with the OEM, TTControl follows a holistic approach that tackles the three central aspects of the automation challenge: high-performance computing (HPC) platforms, the dedicated TTC Application Development Centre, and an internal R&D team specialising in multi-modal perception and data fusion.

On the R&D side, TTControl can leverage its well-established network of international partners, such as the recently co-founded CovisionLab and its sister company TTTech Auto, which together ensure extensive knowledge transfer from the automotive industry to the mobile machinery sector.

Latest News from the R&D Lab

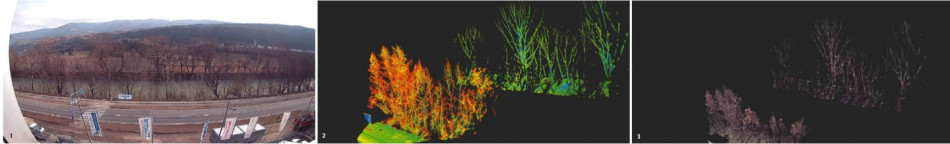

TTControl’s internal R&D team is focusing on data fusion (DF), because automation functionalities demand highly complex perception. Since no single sensor fulfills all requirements, a combination of several sensing modalities is mandatory. Data fusion correlates multiple data sources in both time and space, so that more reliable and meaningful information can be provided.

The so-called “object DF” is a common data fusion strategy in which each sensor's data stream is pre-processed separately. Fusion is performed at a later stage in the pipeline and operates on low-dimensional information (e.g. object lists). This approach forces the user to define strategies for dealing with sensor disagreements. A “raw DF” approach, by contrast, combines the raw sensor data directly, exploiting the complementary strengths of heterogeneous sensing modalities. In practice, this helps extend the vehicle’s operation to a wider range of scenarios and limits the number of false positives.
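To make the object-level strategy more concrete, the Python sketch below shows a late fusion step under simplifying assumptions. Each sensor is assumed to have already produced an object list in a common 2D vehicle frame; the `Detection` class, the `fuse_object_lists` function, the 1 m association gate, and the rule for handling disagreements are all hypothetical choices for illustration, not TTControl's implementation. Note how the code has to state explicitly what happens when only one sensor reports an object.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical object-list entry: position in metres in an assumed
    # common vehicle frame, a class label, and a confidence in [0, 1].
    x: float
    y: float
    label: str
    confidence: float


def fuse_object_lists(camera, lidar, gate=1.0):
    """Greedy nearest-neighbour association of two object lists (a sketch)."""
    fused = []
    unmatched = list(range(len(lidar)))  # indices of LiDAR objects not yet used
    for cam in camera:
        best_idx, best_dist = None, gate
        for i in unmatched:
            d = math.hypot(cam.x - lidar[i].x, cam.y - lidar[i].y)
            if d <= best_dist:
                best_idx, best_dist = i, d
        if best_idx is not None:
            lid = lidar[best_idx]
            unmatched.remove(best_idx)
            # Both sensors agree: take the position from the LiDAR (precise
            # ranging) and the label from the camera (semantics).
            fused.append(Detection(lid.x, lid.y, cam.label,
                                   max(cam.confidence, lid.confidence)))
        else:
            # Disagreement: only the camera saw it. Assumed policy: keep it,
            # but halve its confidence.
            fused.append(Detection(cam.x, cam.y, cam.label, cam.confidence * 0.5))
    for i in unmatched:
        # Disagreement: only the LiDAR saw it. Same assumed policy.
        lid = lidar[i]
        fused.append(Detection(lid.x, lid.y, lid.label, lid.confidence * 0.5))
    return fused


camera = [Detection(5.0, 0.2, "person", 0.8), Detection(20.0, 3.0, "person", 0.6)]
lidar = [Detection(5.3, 0.0, "unknown", 0.9), Detection(-4.0, -2.0, "unknown", 0.7)]
for obj in fuse_object_lists(camera, lidar):
    print(obj)
```

Halving the confidence of single-sensor detections is exactly the kind of disagreement policy that object-level fusion forces the developer to choose; another application might instead discard such detections or always trust one sensor over the other.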

The image above shows an example of how to fuse raw data from an RGB camera (1) and a LiDAR (2). The LiDAR provides precise range information, while the camera provides a detailed semantic understanding of the scene. By fusing these two data sources (3), it is possible to create multidimensional data that associates color information with each 3D point. The fused data can then serve as input for deep learning-based perception algorithms.
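The camera/LiDAR association described here can be sketched in a few lines of Python with NumPy. This minimal example assumes an ideal pinhole camera with intrinsic matrix `K`, a known rigid transform (`R`, `t`) from the LiDAR frame to the camera frame, and already time-synchronised data; lens distortion and occlusion are ignored, and the function name `colorize_point_cloud` is hypothetical. Each LiDAR point in front of the camera is projected onto the image plane, and points that land inside the image receive the RGB value of the pixel they hit.

```python
import numpy as np


def colorize_point_cloud(points, image, K, R, t):
    """Attach RGB values to LiDAR points (hypothetical helper, pinhole model).

    points: (N, 3) XYZ in the LiDAR frame
    image:  (H, W, 3) RGB image
    K:      (3, 3) camera intrinsics; R (3, 3), t (3,): LiDAR -> camera transform
    Returns an (M, 6) array of [x, y, z, r, g, b] in the LiDAR frame.
    """
    # Transform all points into the camera frame.
    pts_cam = points @ R.T + t
    # Keep only points in front of the camera (positive depth).
    in_front = pts_cam[:, 2] > 1e-6
    pts_cam, pts_lidar = pts_cam[in_front], points[in_front]
    # Pinhole projection to homogeneous pixel coordinates, then dehomogenise.
    uvw = pts_cam @ K.T
    u = uvw[:, 0] / uvw[:, 2]
    v = uvw[:, 1] / uvw[:, 2]
    cols = np.floor(u).astype(int)
    rows = np.floor(v).astype(int)
    # Discard projections that fall outside the image.
    h, w = image.shape[:2]
    inside = (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    colors = image[rows[inside], cols[inside]].astype(float)
    # Fused output: 3D position plus the color sampled at its pixel.
    return np.hstack([pts_lidar[inside], colors])


K = np.array([[100.0, 0.0, 2.0], [0.0, 100.0, 2.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)  # assume LiDAR and camera frames coincide
image = np.arange(48).reshape(4, 4, 3)
points = np.array([[0.0, 0.0, 5.0], [0.0, 0.0, -5.0], [1.0, 0.0, 1.0]])
print(colorize_point_cloud(points, image, K, R, t))
```

In this toy call only the first point survives: the second lies behind the camera and the third projects outside the 4×4 image. The returned (x, y, z, r, g, b) array is one possible input format for a point-based deep learning model; a production pipeline would additionally handle occlusions (several 3D points hitting the same pixel), distortion correction, and the motion of the machine between the LiDAR sweep and the camera exposure.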

Paving the Way for the Future

TTControl is ready to support customers in preserving their first-mover advantage in the autonomy of their mobile machinery. With its holistic approach, TTControl will be the vertical integration partner needed for such complex and demanding projects. This will allow OEMs to focus on their unique value propositions, such as use case definitions, machine functionalities, or advanced user experiences. This collaborative framework will lead to efficient and successful long-term co-development relationships, shifting mobile machinery to a new level of automation.